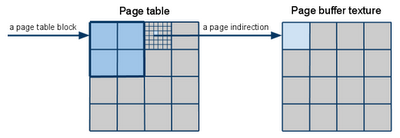

I've been playing with the thought that the page table of a virtual texture could itself have a higher-level indirection table, allowing us to create much bigger virtual textures by dynamically allocating huge virtual texture blocks. This would make geometry streaming simpler with virtual texturing, since you wouldn't need to have the entire world's page table in memory at once, and it could help avoid precision issues when page tables get too big.
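To make the idea a bit more concrete, here's a rough CPU-side sketch of what such a two-level lookup might look like. All the names (`VirtualTexture`, `PageTableBlock`, `BLOCK_PAGES`) and the block size are made up for illustration, and a real implementation would live in GPU textures rather than Python dictionaries.

```python
from dataclasses import dataclass, field

# Hypothetical block size: each higher-level block covers 1024 x 1024 pages.
BLOCK_PAGES = 1024


@dataclass
class PageTableBlock:
    # Sparse storage: local (x, y) page coordinate -> physical page id.
    # Empty regions cost nothing, which is the point of the scheme.
    entries: dict = field(default_factory=dict)


class VirtualTexture:
    def __init__(self):
        # Top-level indirection table: block coordinate -> page table block.
        # Blocks are only allocated when something is mapped into them.
        self.blocks = {}

    def map_page(self, page_x, page_y, physical_page):
        key = (page_x // BLOCK_PAGES, page_y // BLOCK_PAGES)
        block = self.blocks.setdefault(key, PageTableBlock())
        block.entries[(page_x % BLOCK_PAGES, page_y % BLOCK_PAGES)] = physical_page

    def lookup(self, page_x, page_y):
        # First level: find the block. Second level: find the page in it.
        block = self.blocks.get((page_x // BLOCK_PAGES, page_y // BLOCK_PAGES))
        if block is None:
            return None  # this part of the world's page table isn't loaded
        return block.entries.get((page_x % BLOCK_PAGES, page_y % BLOCK_PAGES))

    def unload_block(self, block_x, block_y):
        # Streaming out a region drops its whole page table block at once.
        self.blocks.pop((block_x, block_y), None)
```

Only the blocks that are actually touched exist in memory, so the virtual address space can be enormous without the page table growing with it.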

The texturing on geometry would not be able to cross the edges of these higher-level blocks, but that would be fine because these blocks would still be really big, much bigger than you'd ever want polygons to be. Also, what we really care about is the actual texture pages that we store on disk, and loading them quickly enough. If page tables have huge empty spaces, it doesn't matter, because they don't take any space on disk or any substantial space in memory. So if we have lots and lots of additional page table space, we gain a lot of freedom in how we build and update our virtual texture, and in how we place our model/level textures within it. We don't need to pack it as tightly anymore, and we could consider using other metrics to place our textures in our virtual texture, for example the proximity of the geometry on which the texture lies.

Obviously we do not want to have an extra indirection in our pixel/fragment shaders, which would be terribly wasteful, but we could easily do it in our vertex shaders instead, considering that geometry wouldn't be allowed to cross these higher-level blocks. Another way would be to allow X page table blocks in memory at the same time and give each page table block a fixed position within the page table; then you wouldn't have to use a vertex shader either. But you'd have to guarantee that no two page table blocks with the same position would ever be loaded at the same time, which might be fine if you have enough block positions.
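Here's a small sketch of how that fixed-position variant could be bookkept. The modulo mapping from block coordinate to slot is purely my assumption for the example (any fixed assignment would do), and `ResidentBlocks`, `SLOTS_X`, `SLOTS_Y` and `resident_uv` are hypothetical names.

```python
# Hypothetical size of the resident page table, measured in blocks.
SLOTS_X, SLOTS_Y = 4, 4


class ResidentBlocks:
    def __init__(self):
        # slot position -> coordinate of the block currently occupying it
        self.slots = {}

    @staticmethod
    def slot_of(block_x, block_y):
        # Assumed fixed assignment: wrap the block coordinate into the slot grid.
        return (block_x % SLOTS_X, block_y % SLOTS_Y)

    def load(self, block_x, block_y):
        slot = self.slot_of(block_x, block_y)
        owner = self.slots.get(slot)
        if owner is not None and owner != (block_x, block_y):
            return False  # another block with the same position is resident
        self.slots[slot] = (block_x, block_y)
        return True

    def unload(self, block_x, block_y):
        slot = self.slot_of(block_x, block_y)
        if self.slots.get(slot) == (block_x, block_y):
            del self.slots[slot]


def resident_uv(block_x, block_y, u, v):
    # (u, v) is in [0, 1) local to the block. Because the slot is fixed,
    # this offset never changes and could be baked into the mesh offline.
    slot_x, slot_y = ResidentBlocks.slot_of(block_x, block_y)
    return ((slot_x + u) / SLOTS_X, (slot_y + v) / SLOTS_Y)
```

In this sketch, blocks (1, 2) and (5, 2) land on the same slot, so the streaming code would have to make sure one is unloaded before the other comes in. The more slots there are, the further apart such colliding blocks end up in the world.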

On another note, I've been looking at Assimp (I know what you're thinking, but no, that stands for Open Asset Import Library, you pervert) and it's pretty cool. I couldn't get the .NET library to compile, which is a shame, but I've been using the C compiled command line tool to convert all the Lightwave files in Quake 4 into an easily parsable XML format, which I then parse and use in my, oh so neglected, virtual texture experiment. It really makes everything feel more like an actual level, instead of random geometry with big gaping holes in it ;) Along the way I discovered that somehow my geometry was swizzled across an axis compared to Quake 4, so I fixed that. In the process of getting all the geometry in the level I've had to parse all the skin, guide, mtt (material type), and def (entity definition) files (next to all the mtr (material), map and proc files that I was already parsing before), which was a big adventure in figuring out how the id Tech 4 technology works from a data point of view. It certainly gave me renewed respect for its flexibility, despite the engine's age.

One of these days I'm going to put support for all the texture and door animations in my little demo (well, it's actually starting to become a little engine of sorts), and put in proper support for texture blending. And brute-force page VSD as a pre-process (to determine if a page mip can actually ever be visible). And fast texture compression. And lighting, obviously. And physics. And maybe it's not really an engine after all :)