I haven't had much time lately (I was sick and had other, higher-priority stuff to do), so I just quickly tried out some ideas. Well, 'quick' is a relative term, since my current lighting calculation implementation isn't exactly quick. Every bounce takes about 10-20 minutes, mostly because of the slow read-backs from the graphics card. There's plenty of room for improvement there.


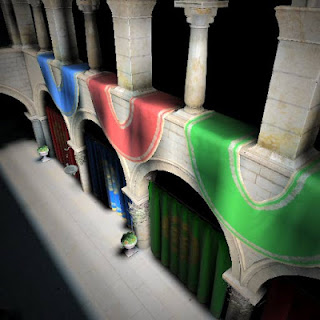

| The Crytek sponza scene I use for testing |

There are basically two ideas that I tried. First of all, until now I was calculating the samples by simply rendering the scene in 6 different directions (up, down, left, right, front, back) and using the results directly as the colors for those sample directions. I quickly realized this is wrong. For example, if light is only visible when you render in the up direction, the colors for all the sideways directions would be completely black, even though light coming from above should still contribute to them.


| Sampling without hemicube |

So I realized it would be better to use a hemicube to calculate the lighting for each direction, and as you can see you get a much better result after even the very first bounce (keep in mind that samples inside the geometry are completely black here):


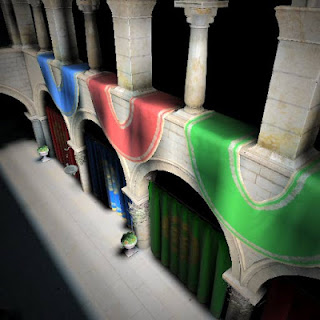

| Sampling with hemicube |

And this makes perfect sense when you think about it. After all, for every pixel in the scene, we're trying to pre-calculate an approximation of the integral of all the light coming from every direction, not some sort of weird axis-aligned lighting. This improvement caused some nice new visual effects after only a couple of bounces:


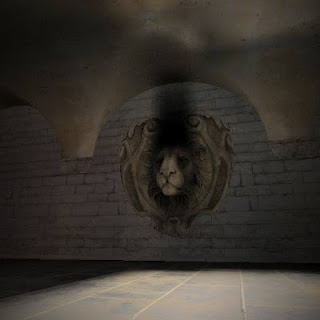

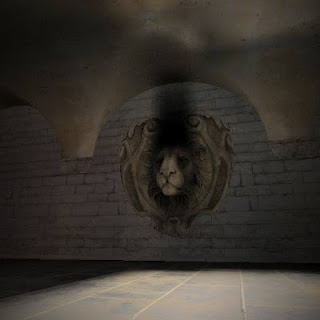

| Indirect lighting casting a shadow of the lion's face |


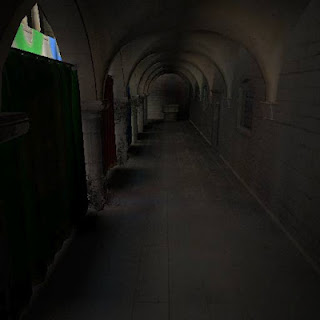

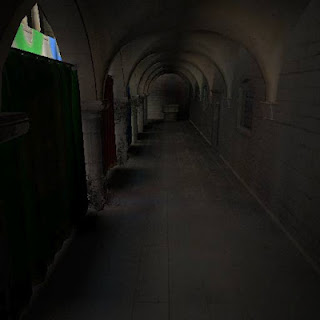

| Soft shadows cast by the sky |

A cool thing about the whole hemicube approach is that I already had all the data I needed to build the hemicubes. I just had to integrate each quarter of each cube face separately (with the appropriate weights), which gives us 4 colors for each cube face. Then, for each of the six directions, we take the right 12 quarters (the 4 quarters of the face in that direction, plus the 2 nearest quarters of each of the 4 adjacent faces) and integrate those together to get our final directional color.
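The quarter selection can be sketched roughly as follows. This is only an illustration, not my actual implementation: the key layout `(face_axis, (sx, sy, sz))`, the function names, and the equal weighting of the 12 quarters are all assumptions made for the example (each quarter of a cube covers the same solid angle, but any cosine weighting is left out here).

```python
from itertools import product

# Hypothetical layout: a quarter is identified by the axis of the cube face it
# lies on (0 = x, 1 = y, 2 = z) and a sign triple. The sign on the face axis
# says which of the two opposite faces it is; the other two signs say which
# quarter of that face it is.


def quarter_keys():
    """Return the keys of all 24 quarters (6 faces x 4 quarters)."""
    return [(axis, signs)
            for axis in (0, 1, 2)
            for signs in product((-1, 1), repeat=3)]


def hemicube_color(quarters, axis, sign):
    """Combine the 12 quarters that lie on the hemicube facing (axis, sign).

    `quarters` maps every quarter key to an already integrated RGB tuple.
    Assumption: all quarters are weighted equally.
    """
    picked = [color
              for (_face_axis, signs), color in quarters.items()
              if signs[axis] == sign]
    # 4 quarters from the facing face + 2 from each of the 4 adjacent faces.
    assert len(picked) == 12
    return tuple(sum(c[i] for c in picked) / len(picked) for i in range(3))
```

With light coming only from the up face, `hemicube_color(quarters, 1, 1)` picks up all 4 lit quarters, while a sideways direction such as `hemicube_color(quarters, 0, 1)` still picks up 2 of them instead of ending up completely black.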

Another thing I tried was super-sampling (basically rendering lots of samples around a certain location and averaging them) for all the samples that either had a strong discontinuity, or that were completely inside geometry and right next to a sample that wasn't. This kinda worked (at least for the occluded samples), although not as well as I had hoped:


| Some sampling aliasing |
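As a rough sketch of the super-sampling idea for the occluded case (not the code I actually use): first pick the samples that are inside geometry but have a direct neighbour that isn't, then average a number of jittered renders around each of them. The grid dictionary `inside`, the `render_sample` callback, and the uniform jitter within `radius` are hypothetical stand-ins for illustration.

```python
import random

# The six direct neighbours of a sample on a regular 3D grid.
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0),
              (0, -1, 0), (0, 0, 1), (0, 0, -1))


def occluded_boundary_samples(inside):
    """Return grid indices that are inside geometry but touch an outside sample.

    `inside` maps (i, j, k) grid indices to True (inside geometry) or False.
    """
    picked = []
    for (i, j, k), is_inside in inside.items():
        if not is_inside:
            continue
        for di, dj, dk in NEIGHBOURS:
            # Missing neighbours (grid border) yield None and are ignored.
            if inside.get((i + di, j + dj, k + dk)) is False:
                picked.append((i, j, k))
                break
    return picked


def supersample(render_sample, position, radius, count, seed=0):
    """Average `count` renders taken at jittered positions around `position`.

    `render_sample` is a hypothetical callback that takes an (x, y, z)
    position and returns an RGB tuple for it.
    """
    rng = random.Random(seed)
    total = [0.0, 0.0, 0.0]
    for _ in range(count):
        jittered = tuple(p + rng.uniform(-radius, radius) for p in position)
        color = render_sample(jittered)
        for channel in range(3):
            total[channel] += color[channel]
    return tuple(t / count for t in total)
```

Every extra render costs another slow read-back, so `count` has to stay small with the current implementation.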

I might try weighting the samples based on their location, but I'm not entirely sure yet what good weights would look like. There are still some artifacts here and there, and I still need to figure out why they happen. In the end I might try using some sort of blending between the samples to blur out the discontinuities a little.


| Some sampling artifacts |

Using some sort of virtual texturing approach to irradiance volumes might make it possible to pack samples together more efficiently, use different resolutions for different areas, or even add additional samples (borders, basically) between samples simply to prevent them from blending into each other. This would take a lot of effort to implement though, so I'm not sure if it's worth it for me.